Deep Learning

- Refers to the use of neural networks.

- "Deep" means a neural network with many hidden layers.

Vector

- input : feature vector

- output : label

- The vector size is always fixed. Changing the size amounts to rebuilding the neural network.

- However, CNNs allow the vector size to change without changing the neural network.

Dimension

- The number of elements in a 1D array (vector).

- For example, a neural network with ten input neurons has ten dimensions.

- Input data dimension:

  - 1D vector - Classic input to a neural network, similar to rows in a spreadsheet. Common in predictive modeling.

  - 2D Matrix - Grayscale image input to a CNN.

  - 3D Matrix - Color image input to a CNN.

  - nD Matrix - Higher-order input to a CNN.

- You might have a 2D input to a neural network with 64x64 pixels. This configuration would result in 4,096 input neurons. This network is either 2D or 4,096D, depending on which dimensions you reference.
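
The 64x64 example can be sketched in a few lines of plain Python. The all-zero image and the names `image` and `feature_vector` are placeholders chosen for illustration, not part of the original notes.

```python
# Hypothetical 64x64 grayscale image: 64 rows, each holding 64 pixel values.
height, width = 64, 64
image = [[0.0 for _ in range(width)] for _ in range(height)]

# Flatten row by row into one 1D feature vector, one element per input neuron.
feature_vector = [pixel for row in image for pixel in row]

print(len(image), len(image[0]))  # 2D view: rows and columns
print(len(feature_vector))        # 1D view: number of input neurons
```

Seen as an image, the input has 2 dimensions. Seen as a feature vector, it has one element per pixel.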

Classification / Regression

- Regression - You expect a number as your neural network's prediction.

- Classification - You expect a class/category as your neural network's prediction.

Neurons and Layers

ANN (Artificial Neural Network)

An artificial neuron multiplies each input by its weight, sums the products, and passes the sum to an activation function. The result is a single output.

x : the input of the neuron

θ : weights of the neuron

ϕ (phi) : an activation function
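
A minimal sketch of this computation, using the symbols above. The sigmoid is picked here only as an example of ϕ, and the input and weight values are made up for illustration.

```python
import math


def sigmoid(z):
    """Example activation function (an assumption; any phi could be used)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(x, theta, phi):
    """Multiply each input by its weight, sum the products, apply phi."""
    z = sum(x_i * theta_i for x_i, theta_i in zip(x, theta))
    return phi(z)


x = [1.0, 2.0, 3.0]        # inputs (illustrative values)
theta = [0.5, -0.25, 0.0]  # weights (illustrative values)

# Weighted sum: 1.0*0.5 + 2.0*(-0.25) + 3.0*0.0 = 0.0
y = neuron_output(x, theta, sigmoid)
print(y)  # a single output value
```

However many inputs the neuron receives, it produces exactly one number.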

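[ F-ANN characteristics ]

- Neurons in the same layer share the same activation function (the activation function may differ from layer to layer)

- Every neuron in a layer is connected to every neuron in the previous layer

The arrows point only from input to output, which makes this a Feedforward Neural Network. Other models also operate recurrently.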

Types of Neurons

There are usually four types of neurons in a neural network:

- Input Neurons - We map each input neuron to one element in the feature vector.

- Hidden Neurons - Hidden neurons allow the neural network to abstract and process the input into the output.

- Output Neurons - Each output neuron calculates one part of the output.

- Bias Neurons - Work similarly to the y-intercept of a linear equation.

We place each neuron into a layer:

- Input Layer - The input layer accepts feature vectors from the dataset. Input layers usually have a bias neuron.

- Output Layer - The output from the neural network. The output layer does not have a bias neuron.

- Hidden Layers - Layers between the input and output layers. Each hidden layer will usually have a bias neuron.

Bias Neurons

- Function like an input neuron that always produces a value of 1.

- They are not connected to the previous layer.

- Except for the output layer, every layer includes a single bias neuron.
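
One way to picture a bias neuron is as an extra input that is always 1 and is fed to every neuron in the next layer. The sketch below assumes that reading. `layer_forward`, the weight layout (last weight belongs to the bias neuron), and all numbers are hypothetical choices for illustration.

```python
def relu(z):
    return max(0.0, z)


def layer_forward(inputs, weights, activation):
    """Compute one fully connected layer.

    weights[j] holds the weights of neuron j. Its last entry is the weight
    on the bias neuron, which always outputs 1.
    """
    extended = inputs + [1.0]  # append the bias neuron's constant output
    outputs = []
    for neuron_weights in weights:
        # Every neuron sees every value from the previous layer.
        z = sum(v * w for v, w in zip(extended, neuron_weights))
        outputs.append(activation(z))  # same activation for the whole layer
    return outputs


inputs = [2.0, 3.0]
weights = [
    [1.0, 1.0, -4.0],  # 2 + 3 - 4 = 1.0
    [1.0, -1.0, 0.5],  # 2 - 3 + 0.5 = -0.5
]
hidden = layer_forward(inputs, weights, relu)
print(hidden)
```

The bias neuron has no incoming connections. It only contributes its weight to each weighted sum, much like a y-intercept.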

Why are Bias Neurons Needed?

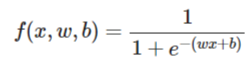

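Take the sigmoid function as an example (x: input, w: weight, b: bias).

In the left plot there is no bias. Changing w changes the slope of each curve, but at x = 0 every curve has the same value, 0.5.

In the right plot the bias varies. Even with the same w, each curve reaches a given output at a different x, because the bias shifts the sigmoid curve.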
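
The same two observations can be checked numerically. This is a small sketch with arbitrarily chosen weights and biases, not the values used in the figure.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# No bias: different weights give different slopes, but every curve
# passes through 0.5 at x = 0.
no_bias_at_zero = [sigmoid(w * 0.0) for w in (0.5, 1.0, 2.0)]

# Same weight, different biases: the curve is shifted along the x axis.
w = 1.0
biases = (-2.0, 0.0, 2.0)
with_bias_at_zero = [sigmoid(w * 0.0 + b) for b in biases]

# The x at which each shifted curve crosses 0.5 is -b / w.
midpoints = [-b / w for b in biases]

print(no_bias_at_zero)
print(with_bias_at_zero)
print(midpoints)
```

Without a bias, no choice of w can move the point where the output crosses 0.5 away from x = 0. The bias is what lets the neuron place that crossing anywhere.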

Modern Activation Functions

- Rectified Linear Unit (ReLU) - Used for the output of hidden layers. [Cite:glorot2011deep]

- Softmax - Used for the output of classification neural networks.

- Linear - Used for the output of regression neural networks (or 2-class classification).

1. Rectified Linear Units (ReLU)
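
ReLU returns its input when the input is positive and 0 otherwise, i.e. max(0, x). A minimal sketch with a few sample inputs chosen for illustration:

```python
def relu(x):
    """Rectified Linear Unit: max(0, x)."""
    return max(0.0, x)


samples = (-2.0, -0.5, 0.0, 0.5, 2.0)
values = [relu(x) for x in samples]
print(values)
```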

2. Softmax

- It ensures that all of the output neurons sum to 1.0.

- This makes it very useful for classification where it shows the probability of each of the classes.
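
A minimal softmax sketch in plain Python. The raw scores are made up for illustration, and subtracting the maximum before exponentiating is a common numerical-stability step added here, not something stated in the notes.

```python
import math


def softmax(scores):
    """Turn raw output scores into values that sum to 1.0."""
    shift = max(scores)  # stability trick; does not change the result
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.1]  # hypothetical raw outputs for three classes
probs = softmax(scores)
predicted_class = probs.index(max(probs))

print(probs)
print(sum(probs))
print(predicted_class)
```

The largest raw score gets the largest probability, so the predicted class is simply the index of the maximum.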

3. Why ReLU?

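To train with gradient descent, a neural network needs the derivative of its activation function. The derivative of a saturating function such as the sigmoid shrinks toward 0 as x moves away from the origin, which slows learning.

ReLU, in contrast, avoids this problem: its derivative is 1 for every positive input.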
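
A small numerical comparison of the two derivatives, assuming the problem in question is the shrinking sigmoid gradient. The sample points are arbitrary, and the ReLU derivative at exactly 0 is taken as 0 by convention.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    """d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)); at most 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_derivative(x):
    """1 for positive inputs, 0 otherwise (value at 0 chosen by convention)."""
    return 1.0 if x > 0 else 0.0


for x in (0.0, 2.0, 5.0, 10.0):
    print(x, sigmoid_derivative(x), relu_derivative(x))
```

The sigmoid derivative falls off quickly as x grows, while the ReLU derivative stays at 1 for any positive input.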
